How to Structure Lead Gen Facebook Ad Accounts For Testing & Scaling?

Got this question from a friend:

“How do I structure testing on Facebook ads?”

A huge question, even if you narrow it down to just conversion/lead generation campaigns. There’s the technical structure and there’s the creative testing process. So let’s tackle the technical structure first, and I can write about the creative testing process later.

For the technical structure, there are many approaches, each with pros and cons. Which one you choose ultimately seems to come down to your preference on:

creative control

management simplicity

spend efficiency

While most structures put one business/product line per campaign, their adset and ad structures vary. Here are the 3 main setups:

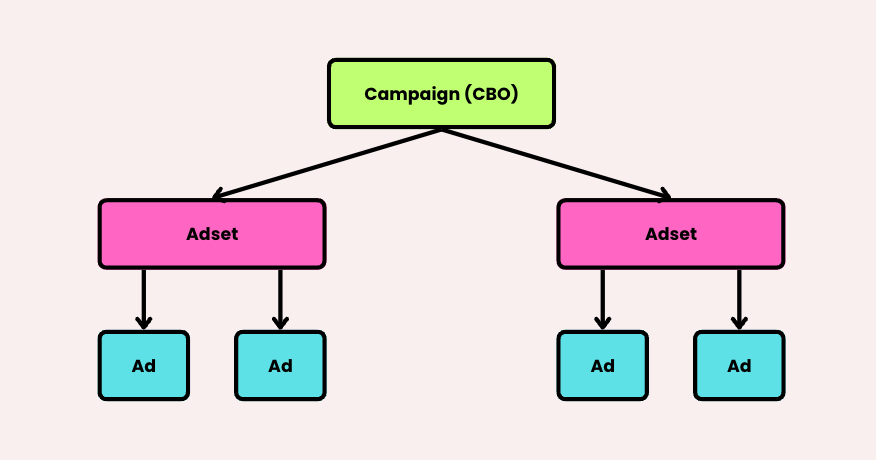

1. Plain Vanilla

One campaign for each business line with Advantage campaign budget (formerly Campaign Budget Optimization, CBO). One adset per target audience (typically 1-3 adsets), and 2-5 ads in each adset. To optimize the account, you simply increase/decrease spend by 20% every few days, turn off poorly performing adsets and ads, and replace them.
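To make the “20% every few days” rule concrete, here’s a minimal Python sketch. It assumes you judge performance by cost per lead (CPL) against a target you pick yourself; the target CPL, the 3-day waiting period and the names `CampaignSnapshot` and `next_daily_budget` are hypothetical, not part of any Meta tool or API.

```python
from dataclasses import dataclass

# Hypothetical settings: the article only says "20% every few days".
STEP = 0.20                    # size of each budget change
MIN_DAYS_BETWEEN_CHANGES = 3   # assumed meaning of "every few days"


@dataclass
class CampaignSnapshot:
    daily_budget: float          # current campaign (CBO) daily budget
    cost_per_lead: float         # CPL over the evaluation window
    days_since_last_change: int  # days since the budget was last edited


def next_daily_budget(snap: CampaignSnapshot, target_cpl: float) -> float:
    """Return the new daily budget after one 20% step, or the same budget if it's too soon."""
    if snap.days_since_last_change < MIN_DAYS_BETWEEN_CHANGES:
        return snap.daily_budget  # too soon: let the last change settle
    if snap.cost_per_lead <= target_cpl:
        return round(snap.daily_budget * (1 + STEP), 2)  # at or under target: scale up 20%
    return round(snap.daily_budget * (1 - STEP), 2)      # over target: scale down 20%
```

For example, a $100/day campaign at a $4.50 CPL against a $5 target would step up to $120/day; at a $6 CPL it would step down to $80/day. Keeping each change to one fixed-size step is the whole point: small edits are less likely to throw the campaign back into learning than big swings.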

Pros:

Simple

Standard

No overlap on ads or targeting

Cons:

Poor control over which target gets more budget

Poor control over which ad gets more budget

Meta will naturally prefer videos over static images

Testing ads means editing adsets (adding new ads and turning off old ones), which resets the learning phase.1

I typically use this approach for very small and simple ad accounts that don’t require much testing.

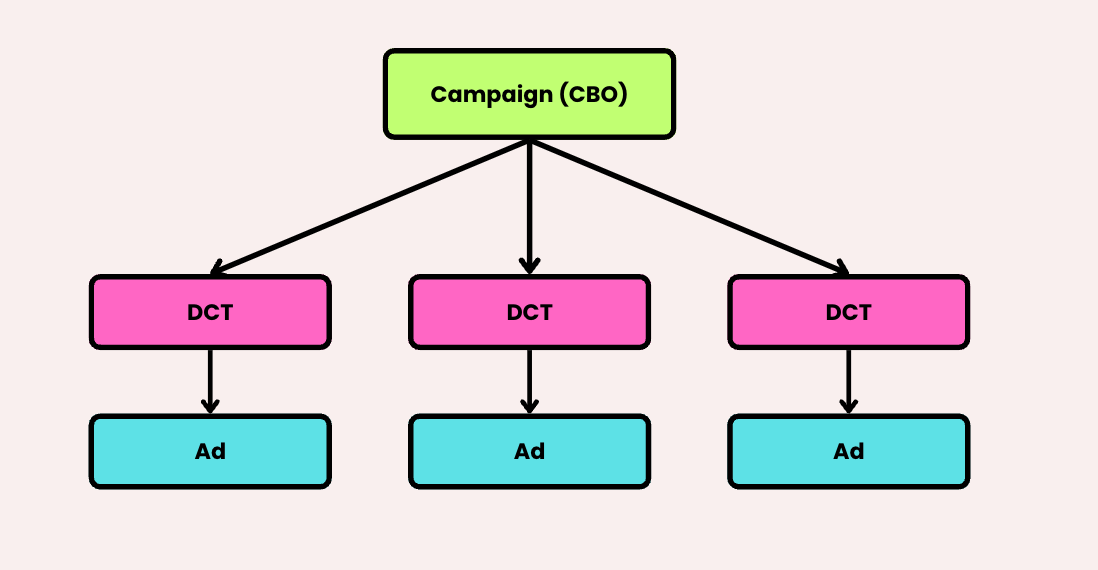

2. Automated

Popularized by Charlie T, this setup brings everything into 1 CBO campaign with, for example, 2-4 DCTs (Dynamic Creative Tests, i.e. adsets using the Dynamic Creative option). To optimize the account, you increase/decrease spend by 20% every few days, launch more DCTs and turn off underperforming ones over time.

Pros:

Nice and simple

Easy to manage and review performance

Cons:

Audience overlap

Creative overlap if you’re doing message testing, i.e. you’ll need two DCTs with the same creatives but different copy

Reliance on Meta to properly test/discover winners

Meta is likely to prioritise videos over images

This is awesome if you really trust Meta. The argument driving this approach is that Meta’s AI knows best, so handing it the steering wheel completely is likely the most efficient option.

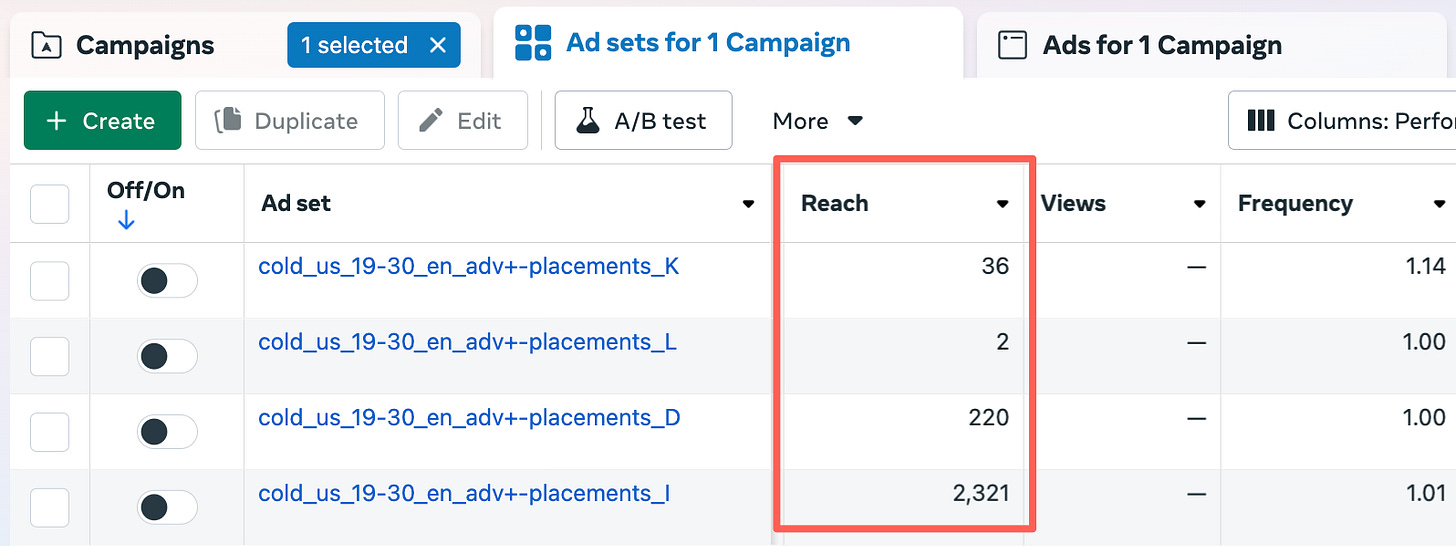

The problem I have with this approach is that you can’t trust Meta to give things a fair go. For example, I’ve launched new adsets to compete in the same CBO campaign, and they’ve received just 2 impressions over 7+ days. How does Meta know whether those ads are good if it hasn’t even tried them?

I’ve also had instances where an ad got no attention from Meta under CBO, but when given its own budget (forced by using adset-level budgeting) it performed.

Those two experiences don’t gel with this rationale.

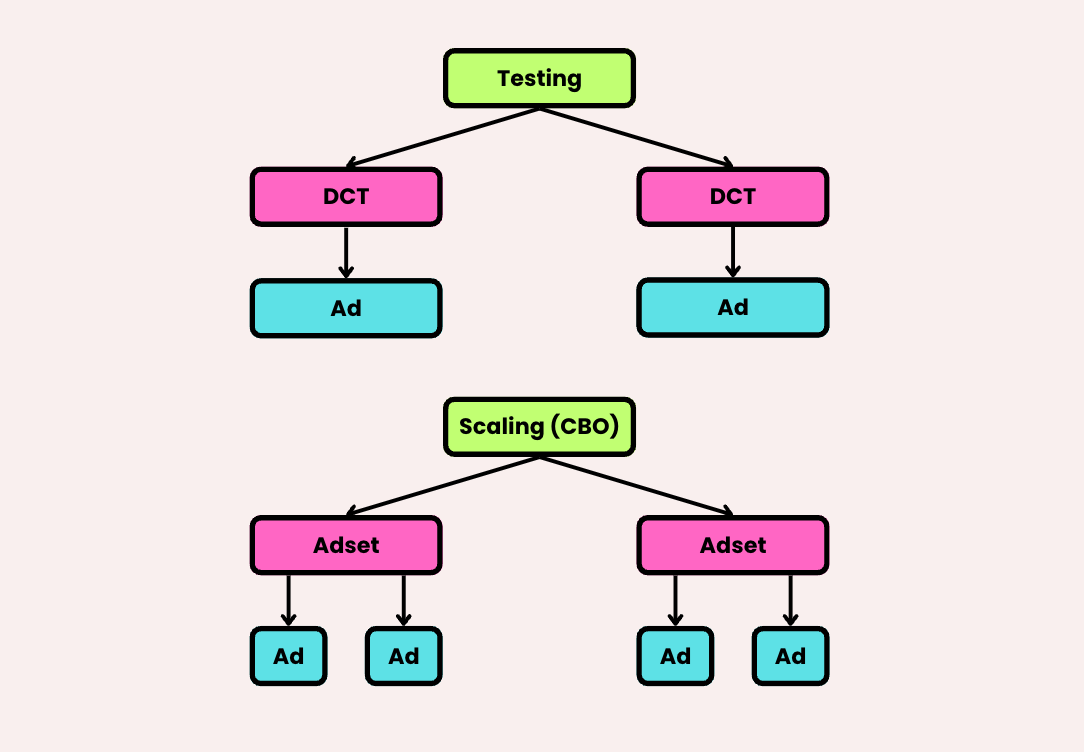

3. Separated Testing & Scaling

A blend of the two styles above, but better suited to identifying winners and spending more money on them.

You will have 2 campaigns here.

A testing campaign with adset budget optimization (ABO), with, say, 10-25% of your budget for testing ads. You’d run Dynamic Creative Tests (DCTs) by enabling the Dynamic Creative option in the adset settings.2 This means 1 ad per adset. Most advice I see says to run 2-4 DCTs at a time.

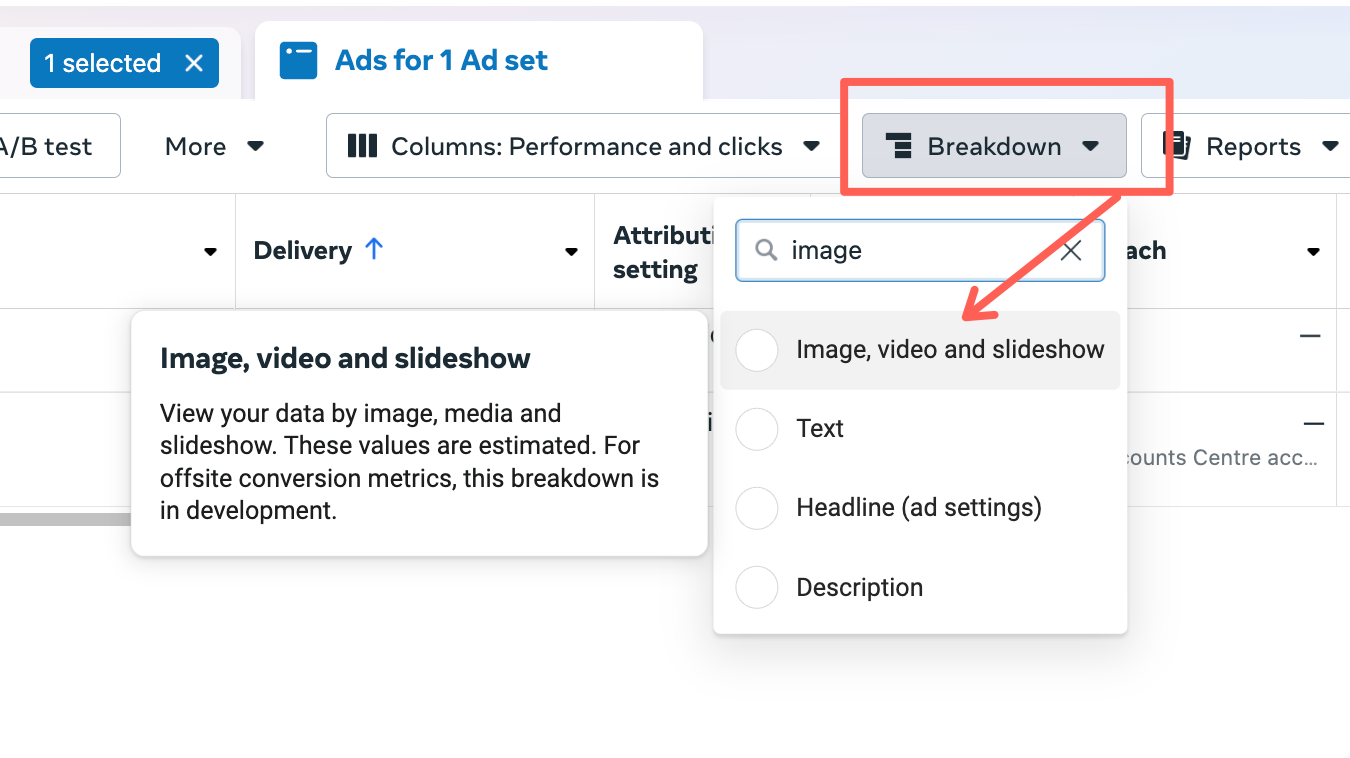

Your scaling campaign gets the remaining 75-90% of your budget. It takes the winning combinations of headlines, copy and creatives from the testing campaign and runs them as ads in 1 or more adsets (depending on how many target audiences you have). You identify these winners using the breakdown report.3
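Picking the “winning combinations” from the breakdown report is essentially a sort by cost per lead, with a floor on how many leads a combination needs before you trust its numbers. Here’s a rough Python sketch; the row fields, the 5-lead floor and `pick_winners` are assumptions for illustration, not Meta’s export format.

```python
from typing import NamedTuple


class BreakdownRow(NamedTuple):
    # One row per asset combination, as you might copy it out of the
    # breakdown report (field names are hypothetical).
    headline: str
    body_copy: str
    creative: str
    spend: float
    leads: int


def pick_winners(rows: list[BreakdownRow], min_leads: int = 5, top_n: int = 3) -> list[BreakdownRow]:
    """Return the top_n combinations by cost per lead, ignoring thin data."""
    # Drop combinations with too few leads to trust their CPL.
    eligible = [r for r in rows if r.leads >= min_leads]
    # Lowest cost per lead ranks first.
    return sorted(eligible, key=lambda r: r.spend / r.leads)[:top_n]
```

The lead floor matters more than the sort: a combination with 1 lead at $3 looks like a star but tells you very little, and those are exactly the “winners” most likely to fall over once they’re moved into the scaling campaign.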

It’s up to you whether you use CBO or ABO. It makes sense to me to use ABO if targeting different audiences.

Pros:

Better control over creative tests

You can also test different messaging angles by testing the same creatives in one adset vs another, but with different copy

More efficient ad spend on your scaling campaign

Cons:

Overlap on campaigns

Overlap on targeting

Complex

Difficult to use for accounts with low spend (because 10% of a small budget doesn’t do anything)
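The low-spend con is easiest to see with a bit of arithmetic. This Python sketch splits the testing share evenly across the active tests, then estimates how long one test takes to collect a given number of leads. The budgets, the $10 CPL and the 10-lead threshold are hypothetical inputs, not benchmarks.

```python
def per_test_daily_budget(total_daily: float, testing_share: float, active_tests: int) -> float:
    """Daily budget each test adset gets when the testing share is split evenly."""
    return round(total_daily * testing_share / active_tests, 2)


def days_to_leads(per_test_daily: float, expected_cpl: float, leads_needed: int) -> float:
    """Days a single test needs to collect leads_needed leads at expected_cpl."""
    return round(leads_needed * expected_cpl / per_test_daily, 1)
```

With $50/day, 10% for testing and 3 DCTs, each test gets about $1.67/day and needs roughly 60 days to reach 10 leads at a $10 CPL. At $500/day with a 20% testing share, the same test needs about 3 days.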

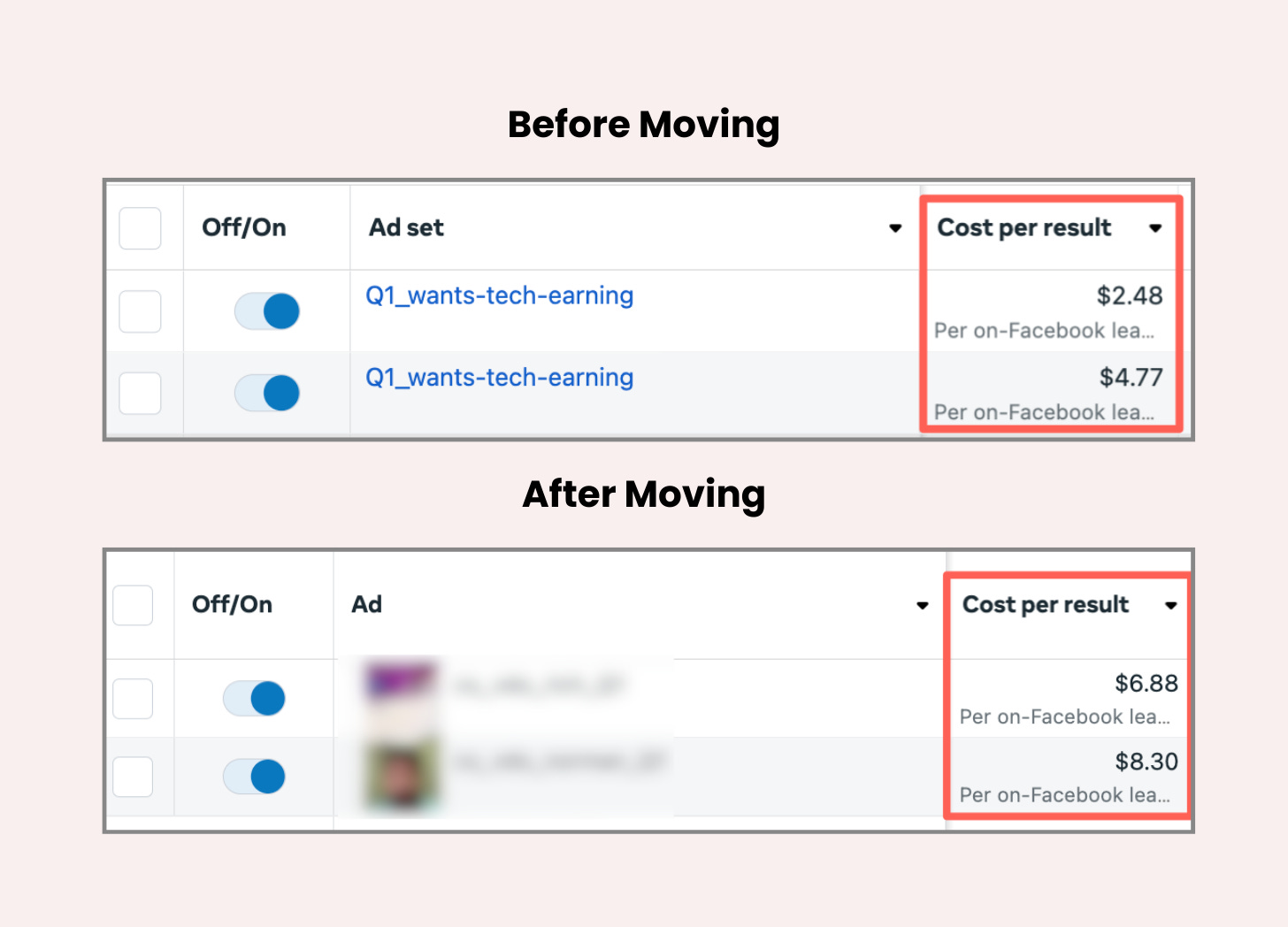

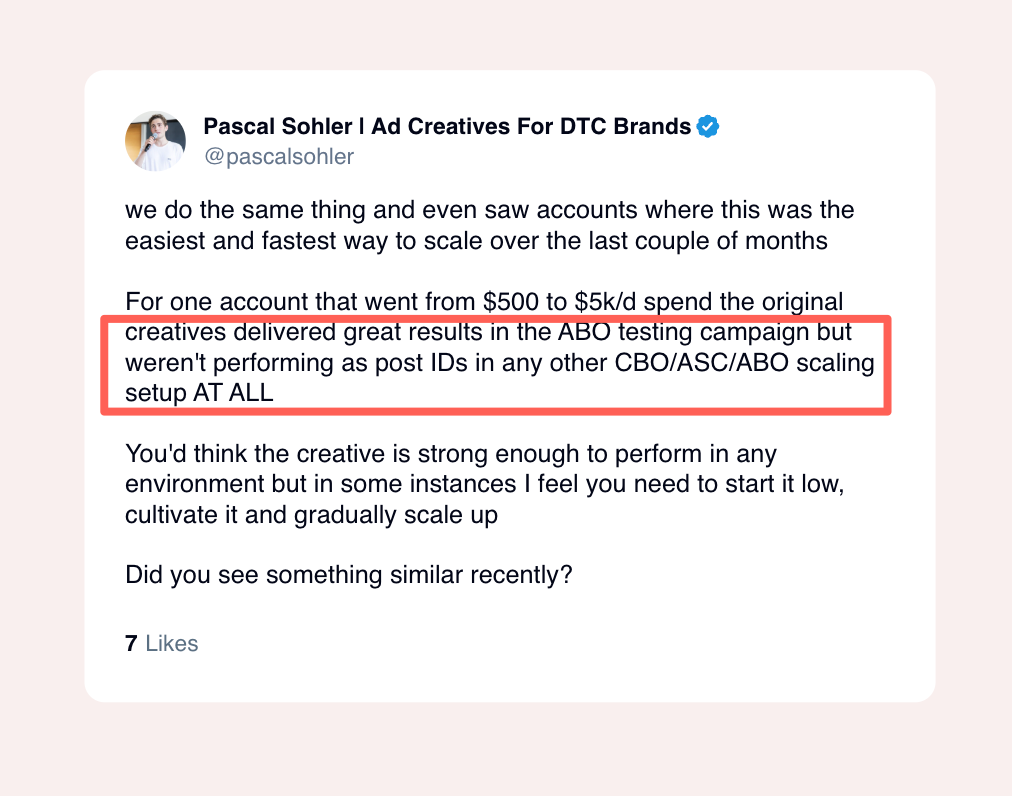

The biggest con of this approach is that ads identified as winners may not scale. Once you move an ad (turning it off and rebuilding it somewhere else), the learning resets, as everybody knows.

I’m not the only one experiencing this, either.

You might get lucky and your $5-per-lead ad keeps performing that way. Or, once that same ad gets 10x the budget, it suddenly tanks because there weren’t enough people interested in it anyway.

How do you overcome the limitations of each? Is there an ultimate setup?

I’ve been curious about this for a long time. So I’ve tried working backwards from what we know about the ads algorithm to engineer a structure that makes the best of all possible options.

So firstly, here are some common things known about the algorithm:

We shouldn’t touch what works, because editing it resets the learning phase.

You have to split video and static ads, because each format is prioritized differently. For example, video ads get more love than static ads.

We can’t use DCTs to test messaging unless each DCT uses only one angle, and then there’ll be too many adsets. So we’ll have to test messaging at the adset level.

Facebook’s official recommendation is to differentiate ads (meaning the ad units themselves) primarily by creative, with no mention of copy.

Facebook’s A/B testing for copy/creative creates a new adset, not a new ad, which suggests they prefer adsets for getting cleaner data when testing messaging.

Facebook wants you to combine adsets where possible.4

Facebook advises avoiding audience overlap.5

These points are also drawn from Meta’s official study guides.

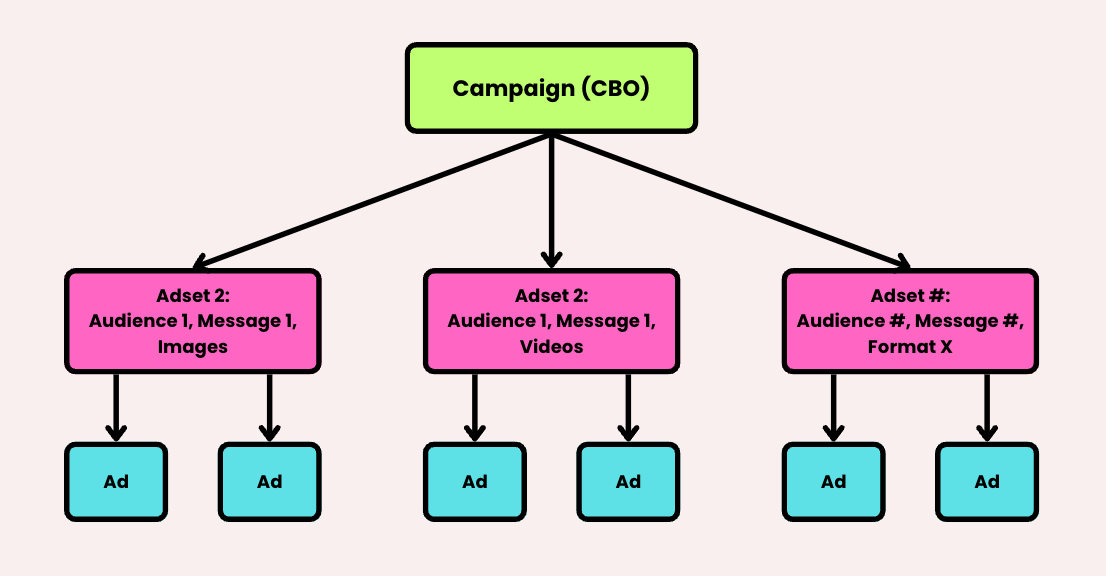

Phew, with these factors in mind, here’s the new structure I’ve come up with:

Setup:

A unified, single campaign with CBO.

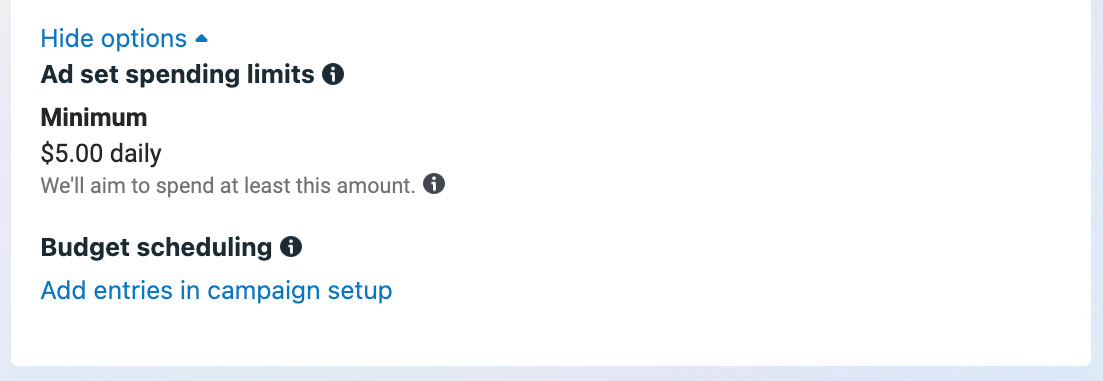

2-4 adsets, each with a minimum spend and dedicated to a single:

Audience

Messaging

Static/video format

2-4 ads in each adset.

If you ran a software development career coaching business, you would have:

1x landing page conversion campaign with CBO.

Adset 1: non-programmers, career changers, static images.

Adset 2: non-programmers, career changers, video.

Adset 3: programmers, promotion seekers, static images.

Adset 4: programmers, promotion seekers, video.
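One way to keep this structure disciplined is to treat each adset as exactly one (audience, messaging, format) combination and generate the list rather than build it by hand. Here’s a minimal Python sketch of the example above; `AdsetSpec` and the naming format are hypothetical planning helpers, not Meta Ads Manager objects.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class AdsetSpec:
    audience: str
    messaging: str
    ad_format: str  # "static" or "video"; formats are kept in separate adsets

    def name(self) -> str:
        # Hypothetical naming convention, not something Meta requires.
        return f"{self.audience} | {self.messaging} | {self.ad_format}"


# Each audience is paired with one messaging angle, as in the example above.
audience_angles = [
    ("non-programmers", "career changers"),
    ("programmers", "promotion seekers"),
]
formats = ["static", "video"]

# Every (audience, angle) pair gets one static adset and one video adset.
adsets = [AdsetSpec(aud, msg, fmt) for (aud, msg), fmt in product(audience_angles, formats)]
```

This produces the same four adsets, in the same order, as Adsets 1-4. Putting all three variables in the adset name also makes the breakdown of results by audience, angle or format a simple text filter.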

To optimize performance, you’d launch new adsets to test a new audience, a new messaging angle or a new round of creatives, while turning off an under-performer. For example:

Adset 5: non-programmers, earn more money, static images
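The rotation step (launch one new adset, turn off one under-performer) can be sketched in Python like this. It assumes “under-performer” means the highest cost per lead among active adsets that have spent enough to judge; that spend threshold, `AdsetResult` and `rotate` are hypothetical, not Meta features.

```python
from dataclasses import dataclass, replace


@dataclass
class AdsetResult:
    name: str
    spend: float
    leads: int
    active: bool = True

    def cpl(self) -> float:
        # No leads yet: treat CPL as infinite so it ranks as the worst.
        return self.spend / self.leads if self.leads else float("inf")


def rotate(adsets: list[AdsetResult], new_name: str, min_spend_to_judge: float) -> list[AdsetResult]:
    """Pause the worst-CPL adset that has enough spend to judge, then add new_name."""
    judged = [a for a in adsets if a.active and a.spend >= min_spend_to_judge]
    if not judged:
        return adsets  # nothing has enough data yet: leave the campaign alone
    worst = max(judged, key=lambda a: a.cpl())
    # Build a new list; the paused adset is a copy, so the input list is untouched.
    updated = [replace(a, active=False) if a is worst else a for a in adsets]
    return updated + [AdsetResult(new_name, 0.0, 0)]
```

Swapping one adset at a time keeps the campaign at roughly the same number of active adsets, and the adsets that are still working never get edited, so they keep their learning.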

This way there is limited audience and creative overlap, and messaging and audiences stay separated by adset. Because you’re not using DCTs, you can also test multiple creatives in the same adset.

Also, setting a minimum spend on each adset makes sure CBO doesn’t completely ignore your new adsets while they’re being tested.
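Minimum spends only help if they leave CBO room to optimize. Here’s a small Python sanity check you could run before launching. The 50% ceiling on how much of the budget the minimums may reserve is my own hypothetical rule of thumb, not a Meta limit, and `check_min_spends` is an illustrative helper.

```python
def check_min_spends(
    campaign_daily_budget: float,
    min_spends: dict[str, float],
    max_reserved_share: float = 0.5,  # hypothetical rule of thumb
) -> list[str]:
    """Return warnings if the adset minimum spends leave CBO too little room."""
    warnings = []
    reserved = sum(min_spends.values())
    if reserved > campaign_daily_budget:
        # The minimums alone exceed the budget, so they can't all be honoured.
        warnings.append(f"minimums total {reserved:.2f}, above the {campaign_daily_budget:.2f} budget")
    elif reserved > max_reserved_share * campaign_daily_budget:
        # Valid, but most of the budget is pre-allocated and CBO has little to optimize.
        warnings.append(f"minimums reserve {reserved / campaign_daily_budget:.0%} of the budget")
    return warnings
```

For example, four adsets with a $10 minimum each in a $100/day campaign reserve 40% and pass; two adsets with $40 and $30 minimums would reserve 70% and get flagged.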

I’m still testing this new structure, but so far it seems to blend some of the best aspects of all three setups.

But that’s just me. If you’re running ads, let me know your setup structure and your logic for building campaigns that way. I’d love to know!

This article is one of my free series of Facebook Lead Ad Tutorials.

Questions or feedback? Drop them in the comments below.

FYI, this feature is being deprecated, I think.

This feature is also not currently working for me.

Meta, Combine ad sets and campaigns in Meta Ads Manager to reduce audience fragmentation.

Meta, About overlapping audiences.